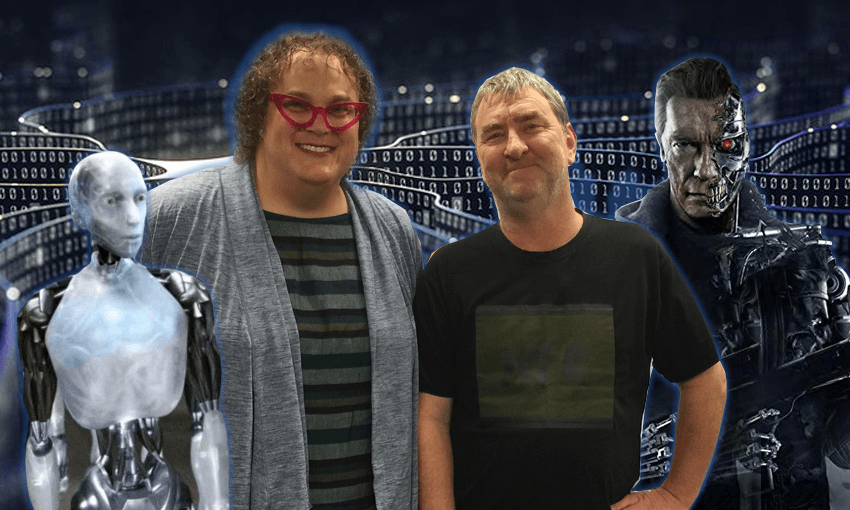

In the fourth episode of Actually Interesting, The Spinoff’s monthly podcast exploring the effect AI has on our lives, Russell Brown speaks to Ana Arriola, general manager and partner at Microsoft AI and Research, about ethics and transparency in tech.

Ana Arriola’s talk at the recent Future of the Future conference – about intersectionality, surveillance capitalism and the risks of AI – was not the stuff of your dad’s (or Steve Ballmer’s) Microsoft. Her speech reflected the way Microsoft thinks now. In recent years, the company has devoted attention and resources to contemplating both the power of its technologies and ways to ensure they help rather than harm.

Most notably, there’s FATE – for Fairness, Accountability, Transparency and Ethics – a Microsoft Research group set up to “study the complex social implications” of AI and related technologies and match those against the lessons of history. This year, FATE published a thoughtful paper on designing AI so it works for people with disabilities, and another on fairness in machine learning systems, which observes bluntly that the problem starts in the way datasets on which ML systems are trained are curated. The same paper points out that AI design teams often don’t know their systems are biased until they’re publicly deployed and, to quote one software engineer, “someone raises hell online”.

There’s also the company’s advisory board, AI Ethics and Effects in Engineering and Research (Aether), which last year published a set of six principles for any work on facial recognition – and whose advice has apparently already led Microsoft to turn down significant AI product sales over ethics concerns. The company also publishes a general set of ethical principles for AI.

Arriola – whose full job title is general manager and partner, AI + research and search – has established another group within the company called ETCH (Ethics, Transparency, Culture and Humanity). It’s evident that Microsoft takes this stuff seriously and that it’s about more than simply aiming for diversity in recruitment.

“So much more,” Arriola told me the day before her talk at the seminar. “Diversity and inclusion just means making sure that there’s safety and security within any given organisation, but it’s really about global intersectionality.

“It doesn’t matter what race you are, what gender you are, what expression you are, what access or privilege you’re coming from, [it’s] making sure that everything is fair and equitable, so that people are never harmed or hurt within the context of technology such as artificial intelligence, or the context of just being in their environment, whether it’s work or home or in civil society.

“There’s a lot of unconscious bias in the world. And now we’re having to really radically confront this and work side by side with our own indigenous populations and under-represented minorities, with the mainstream population to understand that the way that you’re used to doing things is not the right way any more.”

Arriola represents another change in the way information systems and products are developed – a broadening of the concept of design itself.

“Over the last 15 plus years, this role that was disparate – it was like information architect, graphic designer, design engineer – has now all come together into this discipline called product design.”

She began her own working career in the visual field as a cartoon animator and spent time at Apple (“the Fruit Company”, she now calls it), where the original Human Interface Guidelines were holy writ. She believes the Apple guidelines are “still today one of the pinnacles of platform design.”

She says Microsoft’s Guidelines for Human-AI Interaction have recently been acknowledged in a similar set of guidelines published by Google.

Whether the principle that good design should be invisible to the user applies here is a complicated question. We should know in a broader sense that AI is at work, but “it should be transparent to you – you should also have the faith and know that what you’re interfacing with is fair and is unbiased, and is not going to be harmful or hurtful,” she says.

Search – and its AI-driven sibling recommendation – are good examples of things that just seem to work without us being aware of the AI going on in the background. But, as I pointed out here on The Spinoff in the wake of the Christchurch mosque massacre, we also have real-world examples of those same features, most notably within YouTube, radicalising us in pursuit of clicks and engagement.

“It actually could be other things. Bad actors in the world are using these things called data voids. Imagine they’re planning to do something and they place this created data, which could be misinformation or an unfortunate perspective on some sort of event. And the way you access it, it won’t just show up normally if you do a search, but if you go out and start protesting or you’re doing activities and use hashtags, that will trick people into doing a search on it, and searching on it will up-level it – almost like hatch it.

“And when that happens, and it does happen, we’re immediately on top of it. It’s challenging – it can be a little bit like whack-a-mole. But it’s vigilance, it’s tenacity on our part, just being relentless and making sure we’re on top of these things as they come up. Knowing that we’re working on your side and we’re in service of humanity with everything we do.”

Whether Arriola and her colleagues succeed in developing products that make the world better remains to be seen. But it’s certainly encouraging to see a giant company looking to lead other giants into not making the world worse.

*Ana Arriola’s visit to New Zealand was supported by Semi Permanent and Future of the Future.

This content was created in paid partnership with Microsoft. Learn more about our partnerships here.