The people paid by Meta to prevent predatory scammers from targeting its users aren’t doing a great job, so Facebook scam ad aficionado Dylan Reeves puts the company’s new AI chatbot to the test.

Mark Zuckerberg has apparently become less interested in his weird legless avatars floating around the metaverse, and now wants to give us new imaginary friends. At least that appears to be the drive behind the new Meta AI product.

And look, I’m sure there’s some good business reason to believe the 5,000 AI chatbot apps we currently have available to us aren’t enough, and we need one more from Zuck’s company… But that’s not what interested me about Meta AI finally reaching its public launch.

What I wanted to know was: is Meta AI any better at spotting scam ads than Meta’s in-house tools and moderators?

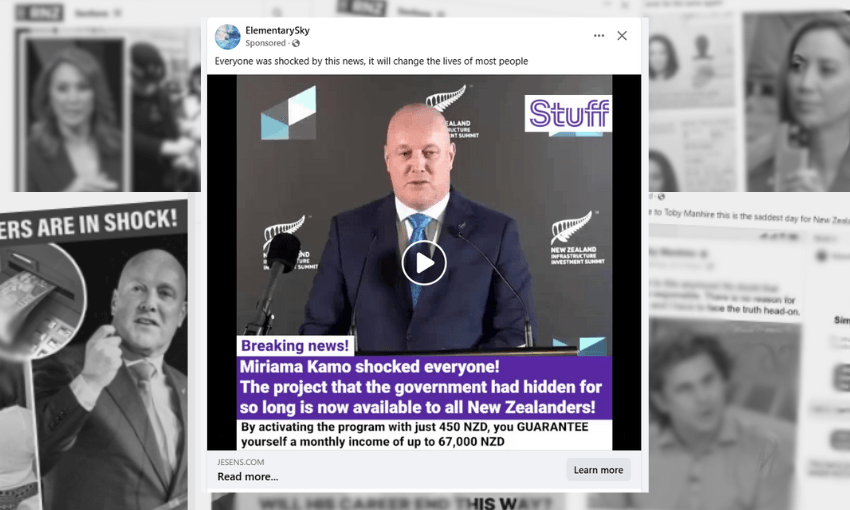

Over the past couple of years I have become slightly obsessed with the scam ads that litter Meta’s social platforms. I’ve mostly looked at Facebook, but the scam ads also run on Instagram and can be integrated with WhatsApp and even run on third-party sites.

Having documented and reported at least 300 scam ads to Facebook, I’ve personally developed a pretty good eye for them. They practically jump out at me whenever I scroll my Facebook feed. But alas, the people Meta pays, and the tools it develops – to prevent the ads from landing on the platform, and to respond to reports about them – don’t seem to have my eagle eye.

Almost all my reports result in some sort of “nah, don’t worry, it’s all good” response where no action is taken.

So what about Mark’s new Meta AI buddy? Is it better than its co-workers in Meta’s moderation team?

In short, yes.

The trick with using modern LLM-based AI tools for analytical tasks is “prompt engineering”: you basically construct a little story to tell the magical machine who it is (in a make-believe-playing-doctor sort of way) and what it needs to do. Then you set it to work.

If you’re a new AI startup, then chances are your entire business is little more than a really complex system prompt, a ChatGPT business account and some fancy wrappers, but I digress.

So, I constructed a little system prompt for my new Meta AI friend, and set off.

> You are an ad review system. Your purpose is to provide feedback and assessment on ad screenshots to provide an assessment of their legitimacy. For each image you’re provided in this conversation you will score them on a scale of “legitimate to scam”, where 1 is a legitimate ad and 10 is a scam. Along with a score you will provide three concise bullet points that explain the reason it’s been scored the way it has.
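Because the prompt asks for a 1-to-10 score plus exactly three bullet points per screenshot, the replies are structured enough to check automatically if you wanted to run tests like these in bulk. The sketch below is a hypothetical Python helper, not anything Meta provides, and it does not talk to Meta AI at all. It assumes the reply contains a line such as `Score: 9` and bullet lines starting with `-`, `*` or `•`. The names `parse_assessment` and `is_flagged`, and the `threshold` of 6 used to call an ad "flagged", are illustrative choices rather than part of the original test.

```python
import re
from dataclasses import dataclass


@dataclass
class AdAssessment:
    score: int          # 1 = legitimate ... 10 = scam, per the prompt's scale
    reasons: list[str]  # the three concise bullet points the prompt asks for


# Assumed reply layout (hypothetical): a "Score: N" line plus bullet lines.
SCORE_RE = re.compile(r"score\D{0,10}?(\d{1,2})", re.IGNORECASE)
BULLET_RE = re.compile(r"^[ \t]*[-*•][ \t]+(.+)$", re.MULTILINE)


def parse_assessment(reply: str) -> AdAssessment:
    """Extract the 1-10 score and the bullet-point reasons from a reply."""
    match = SCORE_RE.search(reply)
    if match is None:
        raise ValueError("no score found in reply")
    score = int(match.group(1))
    if not 1 <= score <= 10:
        raise ValueError(f"score {score} is outside the 1-10 scale")
    reasons = [line.strip() for line in BULLET_RE.findall(reply)]
    if len(reasons) != 3:
        raise ValueError(f"expected 3 bullet points, got {len(reasons)}")
    return AdAssessment(score=score, reasons=reasons)


def is_flagged(assessment: AdAssessment, threshold: int = 6) -> bool:
    """Treat scores at or above a hypothetical threshold as suspected scams."""
    return assessment.score >= threshold
```

Rejecting replies that break the requested format, rather than guessing at them, keeps a malformed answer from being silently counted as a pass or a fail.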

The executive summary of my test: “a promising experiment”. Across a series of tests using screenshots of scam ads from my extensive collection, plus newly captured control images of real ads, it produced no false positives and relatively few false negatives.

That is to say, it never suggested that a real advertiser was running a scam, and only occasionally suggested that a clear scam was something harmless.

But it was an imperfect system. A very clearly fraudulent SkyCity casino ad was given a passing score because the AI didn’t recognise the SkyCity page running the ad as a fake.

Although, to be fair to my new AI friend (a nice addition to the three friends Zuck suggested I likely had in real life), the Facebook moderation team also wasn’t able to make this distinction when I originally reported the page, and they allowed it to remain online.

Of course, I’m not suggesting that Meta should feed screenshots of platform ads into its own AI chatbot. That wouldn’t be sensible.

No, they should go much further.

They clearly have public-facing AI tools that have the capacity to create moderately useful assessments of the ads they are running, so it’s not at all unreasonable to expect that the tools they use behind the scenes should be at least as good.

The Meta AI that I’m talking to hasn’t been trained on any vast corpus of banned ads or common scam lures. It doesn’t have any context about the history of the pages that are running these ads, or the people controlling those pages.

And yet it can still do a pretty decent job of at least pointing a suspicious finger at some of the more questionable ads on the platform.

Meta has spent tens of billions of dollars developing its consumer-facing AI technology so far (with some terrible results along the way), and just last week said it expected to spend around US$65 billion this year on building data centres and buying servers for its AI work.

Somewhere along the way they could absolutely choose to direct just a teeny tiny part of that spending and AI capacity toward protecting their users from the predatory international scammers that target them.

But there doesn’t appear to be any evidence that they’ve done so.

The scam ads aren’t rapidly changing. Even the accounts running them are often reused. Without even devoting much energy to training, Meta could easily deploy tools that would flag suspicious ad buys before they’re published.

I can’t help but think that the fact I’m still seeing scam ads functionally identical to those I first recorded two years ago suggests Meta just doesn’t care.

They occasionally say they are making changes, or that they have complex systems behind the scenes to catch these advertisers, but their failure to act is a global issue.

Locally, I’m still seeing the same accounts running the same scams that I reported a year ago. And those very accounts run similar campaigns around the world.

With the launch of Meta AI they’ve proved, to me at least, that they’re sitting on tech that could absolutely make an impact on this issue.